This Isn't Your Meme: Understanding Privacy Tech's Two-Layer Reality

A Guide to Recognizing When You're the Product in Narrative Markets

Further to

and re-incorporating the gender dynamics from

explaining

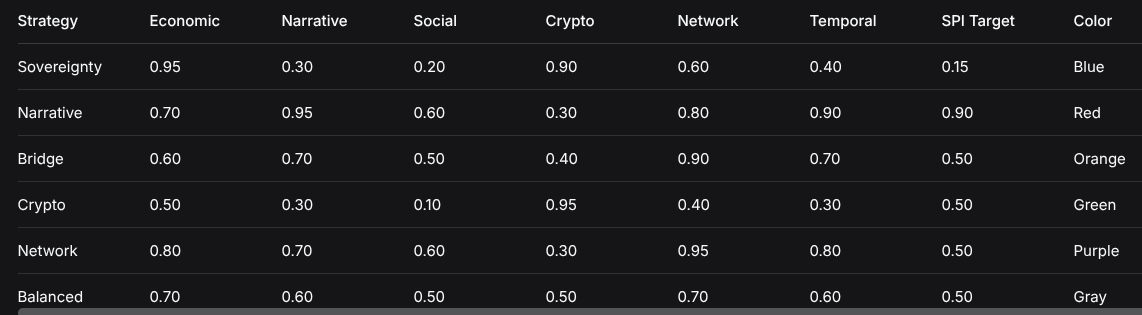

via economic incentives in network formation. The anti-correlation of the security focused type was explored in more detail. It turns out that security types are somewhat positively correlated with bridge agents (partially corrupted narrative weavers with strong plausible deniability, very high influence due to between-ness, and able to navigate ambiguity) to provide plausible deniability up until a corruption regime of between 80-100%: where the requirement for plausible deniability rapidly diminshes. The narrative strategy was found to dominate in both high and low SPI environments, whereas bridge agents were found to be most successful in the middle ground of navigable ambiguity. When SPI gets very high bridge agent utility decreases (no ambiguity) they either become fully corrupted or fork, or very low (no signals to navigate by) where their margins are low. The sovereign strategy was again found to be robust and resilient, but never the most successful, across all regimes. Transitions between regimes were abrupt and cascading pseudo black swan events. This highlights another possible dual meaning with respect to ‘Lunarpunk and the Dark Side of the Cycle’. Again centrality and corruption proved powerful and irresistible, yet also diminished overall network fitness.

This, again, illuminates, with a magic eye, so to speak, many counter-inuitive principles regarding mimetic warfare, in the Neo Dark Age, in the White Hearted Dark Forest, with respect to the over-riding principles of the Lunarpunk Endgame.

Simulation carried out in a Jupyter notebook, which is again available on Google Colab. Write up created with Deepseek.

Executive Summary: Privacy Tech Memes & Whale Signaling Dynamics

The Two-Layer Reality

The simulation reveals privacy tech operates in two simultaneous narrative layers:

Layer 1: Chaotic Surface (Retail/Enthusiasts)

What you see: “Privacy is a human right,” anti-surveillance memes, technical evangelism

Amplification mechanism: Social validation, emotional resonance, tribal identity

Failure rate: High - most memes die in the noise

Who participates: Retail, enthusiasts, idealists

Layer 2: Controlled Undercurrent (Whale/Institutional)

What you don’t see: Economic signaling, regulatory arbitrage discussions, capital deployment timing

Amplification mechanism: Economic concentration, endorsement hierarchies

Success mechanism: Coded language, insider recognition

Who participates: Whales, VCs, regulatory arbitrage specialists

What the Little Guy Should Watch For

1. Sentiment Baiting Patterns

Simulation shows: Whales amplify retail sentiment to create exit liquidity.

Watch for:

Sudden meme virality without clear grassroots origin

Influencer pile-ons timed with unusual trading volume

Narrative consistency that feels too perfectly manufactured

Emotional framing that bypasses critical thinking

2. The Bridge Agent Squeeze

Simulation dynamics: Moderates get squeezed out by both regimes.

Observe:

Nuanced voices disappearing from privacy discussions

Balanced perspectives being drowned by extremism

Technical debates shifting to ideological purity tests

The middle ground becoming unoccupied territory

3. Timing Mismatch Signals

Simulation finding: Different regimes have different temporal patterns.

Notice when:

Whale narratives appear during retail euphoria phases

“Institutional adoption” talk peaks during distribution periods

Regulatory compliance discussions spike before regulatory actions

Privacy advocacy amplifies during surveillance crackdown announcements

The Meme-as-Bait Framework

Phase 1: Retail Meme Launch

What happens: Privacy memes circulate in chaotic regime

Purpose: Build grassroots sentiment, create narrative momentum

Whale position: Often accumulating quietly during this phase

Phase 2: Whale Signal Alignment

What happens: Coded language enters controlled channels

Purpose: Signal insider understanding, coordinate capital moves

Retail position: Still engaged with surface narrative

Phase 3: Narrative Inversion

What happens: Original meme gets co-opted or contradicted

Purpose: Create confusion, facilitate position adjustments

Outcome: Retail left holding narrative bag, whales execute planned moves

Specific Privacy Tech Red Flags

Monero Case Study Patterns

Watch for:

“Counter-correlation” narratives during market stress

Influencer name-drops without corresponding educational content

“Institutional adoption imminent” rumors without verifiable evidence

Privacy vs. compliance debates that ignore regulatory realities

DarkFi/Stealth Mode Project Patterns

Notice:

Dual-language projects: Revolutionary rhetoric + institutional framing

Poetic abstraction: Cybernetic metaphors that obscure economic mechanics

“Network state” discussions that ignore existing capital concentration

Asymmetric communication: Different messages for different audiences

The Front-Running Sentiment Playbook

How Whales Use Retail Memes

Sentiment harvesting: Monitor chaotic regime for emerging narratives

Selective amplification: Boost narratives that align with existing positions

Narrative co-option: Reframe grassroots memes as institutional signals

Exit facilitation: Use amplified sentiment as distribution channel

What This Looks Like in Practice

Step 1: Retail meme emerges (”XMR to $1k!”)

Step 2: Whale channels discuss “privacy as bear market hedge”

Step 3: Influencers signal “institutional interest” in privacy tech

Step 4: Volume spikes, retail FOMO intensifies

Step 5: Whales distribute into retail enthusiasm

Step 6: Narrative shifts to “regulatory concerns” or “technical limitations”

Defensive Posture Recommendations

For the Little Guy

Assume until proven otherwise:

Every viral privacy meme is potential whale bait

Influencer endorsements are often position-adjacent

“Institutional adoption” narratives serve distribution purposes

Regulatory discussions reflect regulatory capture realities

Verification Protocols

Before engaging with privacy narratives:

Check timing: Is this narrative convenient for someone’s position?

Track amplification: Who’s boosting this and what’s their track record?

Look for contradictions: Surface narrative vs. economic realities

Monitor whale channels: Are controlled regime signals aligning?

Survival Strategies

Delay engagement: Let narratives mature before adopting

Verify independently: Don’t rely on amplified channels

Watch flows, not words: Trading volume patterns reveal more than tweets

Understand regimes: Know which layer you’re operating in

The Reality Check

Empirical Pattern

Privacy tech narratives that succeed in chaotic regimes often serve controlled regime interests.

Simulation Explanation

High SPI (controlled) systems:

Define success metrics that favor their narratives

Amplify selectively based on economic alignment

Use chaotic regime as sentiment farm and exit liquidity

Practical Implication

Retail enthusiasm for privacy tech is:

Necessary for project viability (chaotic regime participation)

Exploitable for capital deployment and distribution (controlled regime advantage)

Often misaligned with actual project trajectories

Bottom Line

The simulation demonstrates that in privacy technology ecosystems:

Retail memes are whale tools until proven otherwise.

The little guy should:

Recognize the two-layer game being played

Watch for sentiment baiting patterns

Understand bridge agent dynamics

Monitor timing mismatches

Verify narratives against economic realities

This isn’t cynicism—it’s recognizing the emergent properties of complex adaptive systems where economic concentration naturally creates narrative control advantages.

The simulation suggests that in privacy tech specifically, the gap between chaotic enthusiasm and controlled signaling is particularly wide—and particularly exploitable by those who understand both regimes.

Retail participants should therefore approach privacy tech narratives with the understanding that they’re often participating in a game whose rules are written in a language they don’t speak, for purposes they don’t see, benefiting players they don’t know.

Until the evidence clearly demonstrates otherwise, assume the meme you love is someone else’s exit liquidity.

Empirical Observations Explained by the Simulation

The Two Regimes Phenomenon

The simulation demonstrates that crypto-narrative ecosystems naturally bifurcate into two distinct phase spaces:

Chaotic Regime (Low SPI Environment)

Observed empirically: Retail-dominated spaces, meme coin communities, degen Twitter

Simulation explanation: Low structure preservation means narratives lack amplification mechanisms

What happens: Forced memes, simplistic messaging, high noise-to-signal ratio

Outcome: Most narratives fail to gain traction; persistence occurs through incompetence (system can’t filter)

Controlled Regime (High SPI Environment)

Observed empirically: Whale circles, institutional channels, insider networks

Simulation explanation: High structure preservation through economic concentration and narrative control

What happens: Subtle signals, coded language, endorsement hierarchies

Outcome: Narrative persistence through enforcement (system defines reality)

The Bridge Agent Disappearance Effect

Empirical Observation

Moderate positions, balanced messaging, and nuanced narratives naturally vanish in crypto discourse.

Simulation Explanation

Bridge agents (social alignment 30-70%) are penalized in both regimes:

Chaotic regimes: Get lost in noise

Controlled regimes: Suppressed as threats to narrative control

Result: Polarization emerges naturally as moderate positions become evolutionarily unfit

The Corruption-Reward Feedback Loop

Empirical Pattern

Systems that appear “most accurate” often exhibit the highest corruption, yet maintain narrative dominance.

Simulation Mechanism

The model shows how:

Economic concentration increases narrative success probability

Narrative success increases economic concentration further

The resulting high-SPI system appears “accurate” by its own controlled metrics

This creates a self-reinforcing loop where corruption and control increase together

Real-World Example

The Ethereum ICO funding origin story:

Chaotic regime: Dismissed as conspiracy (lacks amplification)

Controlled regime: Known but irrelevant (doesn’t align with economic incentives)

Persistence mechanism: The economically convenient narrative wins

Two-Tiered Communication Strategies

Observed Pattern

Projects often communicate with:

Chaotic-layer messaging: Poetic, ideological, emotional framing

Control-layer signaling: Economic, regulatory, insider-coded language

Simulation Insight

This isn’t accidental but regime-appropriate adaptation:

Projects must speak different languages to different regime participants

The same message fails if delivered to the wrong regime

Successful projects maintain plausible deniability between layers

Example: DarkFi’s Dual Framing

Chaotic narrative: “Neo Dark Age” - poetic, ideological, revolutionary

Control signal: “Cosmopolitan Network States” - institutional, capital-aligned

Effect: Appeals to both regimes without alienating either

The Timing Dimension

Empirical Observation

Signals succeed or fail based on when they’re delivered, not just what they say.

Simulation Finding

Temporal coordination becomes critical because:

Chaotic timing: Aligns with emotional contagion, hype cycles

Control timing: Aligns with capital flows, regulatory windows

Mistiming: Signals to whales during retail euphoria (or vice versa) fail

The Reality Enforcement Mechanism

What’s Observed

In high-correlation environments, what’s “true” becomes what’s economically convenient for the dominant actors.

Why This Happens (Simulation)

High SPI systems:

Control classification metrics (RJF accuracy)

Define success probabilities that favor their narratives

Suppress alternative verification mechanisms (crypto independence)

Create feedback loops where “accuracy” and control reinforce each other

The Asymmetric Understanding Phenomenon

Empirical Pattern

Different participants in the same ecosystem understand the same project completely differently.

Simulation Explanation

This is regime-dependent perception:

Retail/chaotic participants: See surface narratives, emotional appeals

Whale/control participants: See economic signals, regulatory arbitrage, capital deployment

Both are correct within their respective regime’s reality framework

The Inevitable Polarization

Observed Trend

Crypto discourse increasingly lacks moderate positions or balanced perspectives.

Simulation Dynamics

This emerges naturally because:

Bridge agents (moderates) get penalized in both chaotic and controlled regimes

Systems evolve toward either chaotic fragmentation or controlled centralization

The middle ground becomes evolutionarily unstable

Conclusion: What the Simulation Explains

The model provides a formal framework for understanding why we observe:

Two distinct narrative ecosystems coexisting in the same space

Different success metrics for different participant classes

Persistence of economically convenient narratives despite contradictory evidence

Disappearance of moderate positions and balanced perspectives

Effective use of layered communication by successful projects

Critical importance of timing in narrative deployment

Asymmetric understanding of the same projects by different participants

Self-reinforcing corruption-control loops in seemingly successful systems

This isn’t about what should happen, but what does happen according to the simulation’s dynamics—and these dynamics align with empirical observations across cryptocurrency and privacy technology ecosystems.

The simulation suggests these patterns aren’t accidental but emergent properties of complex adaptive systems navigating multi-dimensional power terrains with competing optimization pressures.

Counter-Intuitive Insights from the Adaptive Preference Navigation Simulation

The Core Paradox: Accuracy ≠ Truth, Stability ≠ Resilience

1. The High-Control Vulnerability Paradox

Counter-intuitive finding: Systems with highest apparent “accuracy” (97%+ RJF classification) are the most vulnerable to catastrophic failure.

Why? Because their “accuracy” is achieved through:

Narrative control rather than truth-seeking

Suppression of information diversity (low crypto dimension)

Centralized economic power (high corruption)

Surprising implication: The systems that appear to work “best” by their own metrics are actually brittle authoritarian regimes waiting for a black swan event to shatter their controlled reality.

2. The Bridge Agent Dilemma

Counter-intuitive finding: Bridge agents (moderate social positions) are penalized in all regimes, even though they increase system resilience (SPI).

In the simulation:

Low SPI regimes: Bridge agents get trapped in chaos

High SPI regimes: Bridge agents are suppressed as threats to control

Their SPI bonus (+20%) is offset by reduced action success rates

Surprising implication: Moderation naturally disappears in both chaotic and controlled systems, creating a self-reinforcing polarization dynamic.

New Insights into Influence Operations & Mimetic Warfare

3. Two Distinct Mechanisms of Narrative Persistence

Traditional models assume narratives persist due to either:

Truth/value (rational choice)

Social contagion (network effects)

This simulation reveals two new persistence mechanisms:

A. Chaotic Persistence (Low SPI)

Narratives persist because nothing works

No effective correction mechanisms exist

“Garbage in, garbage stays” - the system lacks the capability to filter

B. Controlled Persistence (High SPI)

Narratives persist because the system enforces them

High “accuracy” is achieved by defining alternative narratives as “inaccurate”

Reality becomes what the system says it is

4. The Corruption-Feedback Loop

The model reveals a non-linear relationship between corruption and system behavior:

High Economic Power →

Increased Narrative/Corruption Success →

Reduced Crypto Independence →

Increased SPI (through narrative control) →

Further Economic Concentration →

Brittle Centralized ControlSurprising insight: Corruption can increase “system performance” metrics (SPI, RJF accuracy) while decreasing actual resilience.

Novel Contributions to PsyOps Theory

5. The Three-Phase Attack Model

The simulation suggests effective influence operations should:

Phase 1: Chaotic Disruption (Low SPI)

Flood system with contradictory narratives

Overwhelm classification capacity

Create chaotic persistence of multiple narratives

Phase 2: Controlled Narrative (High SPI)

Impose preferred narrative with apparent “accuracy”

Suppress crypto/independent verification

Create controlled persistence of single narrative

Phase 3: Black Swan Trigger

Introduce event that breaks controlled narrative

System has no resilience (brittle) or chaotic correction (incompetent)

Catastrophic cascade across all 6 dimensions

6. The Importance of Timing (Temporal Dimension)

The simulation highlights temporal coordination as critical for:

Narrative control (when to release information)

Economic manipulation (when to concentrate capital)

Social alignment (when to activate bridge agents)

Counter-intuitive: In high-control regimes, temporal coordination becomes more important than content accuracy.

New Understanding of Mimetic Warfare

7. Memes Don’t Win Wars - System States Do

Traditional mimetic warfare focuses on creating better/stronger memes.

This simulation shows: Memes that succeed are those that fit the system state, not necessarily “better” ones.

In chaotic systems: Simple, emotionally resonant memes persist

In controlled systems: Officially sanctioned memes persist

The same meme might fail in one regime and dominate in another

8. The Six-Dimensional Battlefield

The model expands mimetic warfare beyond just narrative/content to include:

Economic dimension: Capital concentration enables narrative amplification

Social dimension: Bridge agents can be targeted or co-opted

Crypto dimension: Independent verification can be suppressed or corrupted

Network dimension: Position matters more than content

Temporal dimension: Timing of releases creates cascades

Surprising insight: Winning the economic dimension may be more important than winning the narrative dimension - economic power directly increases narrative success probability.

General Implications for Complex Adaptive Systems

9. The Optimal Point is Unstable

The simulation suggests there’s an unstable equilibrium at moderate SPI (Class S: 0.2-0.8 SPI) where:

Some bridge agents exist

Moderate corruption levels

Reasonable classification accuracy

But systems naturally evolve toward:

Low SPI chaotic states (through corruption feedback)

High SPI controlled states (through narrative enforcement)

Implication: Self-organizing systems naturally become vulnerable without external interventions to maintain balance.

10. The Measurement Problem

The simulation demonstrates that the metrics we use to measure system health can become tools of control:

SPI measures “structure preservation” - but structure can be preserved through authoritarian control

RJF accuracy measures “classification accuracy” - but the classifier can be trained on controlled data

Corruption measures economic concentration - but this can be framed as “efficiency”

Profound insight: The most dangerous systems are those that appear healthiest by their own metrics.

Conclusion: A New Framework for Understanding Influence

This simulation provides a formal, testable framework that reveals:

Narratives persist through system alignment, not inherent quality

“Accurate” systems can be more vulnerable than “inaccurate” ones

Corruption and control are two sides of the same vulnerability

Moderate positions naturally disappear in evolving systems

Influence operations should target system states, not just content

The model suggests that effective influence operations should:

First understand the current system state (SPI, corruption, bridge agent concentration)

Choose tactics that align with that state (chaotic vs controlled approaches)

Manipulate multiple dimensions simultaneously (not just narrative)

Create feedback loops that push the system toward vulnerability

Time interventions to maximize cascading effects

Most importantly: The simulation shows why truth doesn’t always win - and why systems can persist in false narratives through either chaotic incompetence or controlled enforcement, both of which are naturally emerging properties of complex adaptive systems navigating 6-dimensional power terrains.

This represents a paradigm shift in understanding influence operations - from focusing on content and networks to understanding system states and multidimensional feedback loops. The battlefield isn’t just in minds or networks, but in the evolving landscape of economic, social, crypto, network, narrative, and temporal dimensions - and the complex interactions between them.

What’s Modelled in the Jupyter Notebook: A Technical Breakdown

Core System Architecture

1. The 6D Power Terrain

The simulation models a six-dimensional state space where agents navigate:

Economic Power (state[0]) - Capital concentration, market influence

Narrative Control (state[1]) - Information dominance, storytelling capacity

Social Alignment (state[2]) - Position in social spectrum (0-1 scale)

Crypto Strength (state[3]) - Independent verification, cryptographic sovereignty

Network Position (state[4]) - Structural centrality, connection strength

Temporal Coordination (state[5]) - Timing, synchronization capability

Each agent maintains a position in this 6D space as a normalized vector [0,1]⁶.

2. Strategy Archetypes

Six predefined strategy types with distinct target vectors and SPI targets:

StrategyEconomicNarrativeSocialCryptoNetworkTemporalSPI TargetColorSovereignty0.950.300.200.900.600.400.15BlueNarrative0.700.950.600.300.800.900.90RedBridge0.600.700.500.400.900.700.50OrangeCrypto0.500.300.100.950.400.300.50GreenNetwork0.800.700.600.300.950.800.50PurpleBalanced0.700.600.500.500.700.600.50Gray3. Agent Adaptation Engine

Each AdaptiveAgent has:

State Navigation:

Available Actions: Six possible moves (boost economic, amplify narrative, shift social, strengthen crypto, improve network, optimize timing)

Action Effects: Each action modifies specific dimensions with trade-offs

Success Calculation: Based on strategy alignment, corruption level, bridge status

Decision Making: Heuristic A*-inspired value calculation balancing improvement vs cost

Adaptive Learning:

Success Memory: Tracks last 10 attempts per action type

Exploration Rate: 30% chance to discount costs (encourage exploration)

Learning Rate: 0.1-0.3 random variation per agent

4. Key Metrics & Calculations

Structure Preservation Index (SPI):

SPI = base_spi + pattern_bonus

base_spi = 1.0 - (crypto_strength × 0.7)

if is_bridge: base_spi ×= 1.2

pattern_bonus = (0.1 × narrative) + (0.05 × temporal)RJF Classification Accuracy:

Piecewise linear mapping from SPI:

SPI < 0.2: 30-35% accuracy

SPI 0.2-0.8: 76-90% accuracy (linear interpolation)

SPI > 0.8: 97-100% accuracy

Fitness Calculation:

state_fitness = 1.0 - mean_absolute_distance_to_target

spi_fitness = 1.0 - abs(current_SPI - target_SPI)

total_fitness = 0.7 × state_fitness + 0.3 × spi_fitnessCorruption Level:

python

corruption = count(agents with economic_power > 0.7) / total_agentsBridge Status:

Agents with social alignment between 0.3 and 0.7 are “bridges”

5. Team Dynamics System

Team Formation:

Agents with fitness ≤ 0.7 and no team can join

20% chance per round to attempt team formation

Teams limited to 5 members, max 10 teams total

Formed based on social alignment similarity (±0.3)

Team Dissolution:

Dissolve if < 2 members

Minimum 5-round lifespan

Dissolve if performance plateaus (< 0.02 change in last 5 vs previous 5)

Dissolve if homogeneous (single strategy) and low performance (< 0.4 average)

Team Fitness:

team_fitness = avg(member_fitness) × (1 + diversity_bonus)

diversity_bonus = min(0.3, unique_strategies × 0.05)6. Simulation Engine

Round Execution:

Calculate system-level corruption and bridge percentage

Each agent adapts (chooses and executes action)

Form new teams

Update existing teams, dissolve underperforming

Collect metrics

Initialization:

25 agents by default (configurable)

60 rounds by default (configurable)

Strategy distribution: 20% each for first 5, 10% Balanced (configurable)

Random state initialization near strategy-specific distributions

7. Visualization & Analysis Suite

System Metrics Dashboard:

Average fitness over time

Corruption level evolution

Bridge agent percentage

Active team count

Average SPI trajectory

Average RJF accuracy

Strategy-specific fitness comparisons

Agent State Visualizations:

2D projections of 6D state space

Economic vs Narrative

Social vs Crypto

Network vs Temporal

SPI vs Fitness

Correlation Analysis:

Corruption vs bridge percentage regression

Strategy performance by corruption level comparison

Final fitness by strategy distribution

What Makes This Model Novel

Technical Innovations:

Multi-dimensional fitness landscape with competing optimization targets

Endogenous corruption dynamics that influence action success probabilities

Bridge agent mechanics that create non-linear system effects

Team formation with emergent coordination patterns

SPI regime classification (Class H/S/T) with different failure modes

System Properties Modelled:

Adaptive preference formation - agents evolve toward what succeeds, not what’s “true”

Path dependency - initial conditions and early random events shape long-term evolution

Multiple equilibria - different stable system states possible from same starting point

Phase transitions - sudden shifts between chaotic/controlled regimes

Tragedy of the commons in information quality - individual optimization degrades collective truth-seeking

Emergent Phenomena Captured:

Polarization dynamics - moderate positions naturally disappear

Corruption feedback loops - success begets more of the same behavior

Narrative lock-in - once a narrative dominates, it reshapes success metrics to favor itself

Black swan vulnerability - both chaotic and controlled systems vulnerable, but differently

Team emergence/dissolution - coordination patterns that form and break based on performance

Implementation Details

Code Structure:

1. IMPORTS & SETUP

2. CORE DATA STRUCTURES (Strategy dataclass)

3. AGENT CLASS (AdaptiveAgent with 400+ lines)

4. TEAM CLASS (Team with coordination logic)

5. SIMULATION SYSTEM (AdaptiveSystem with round execution)

6. VISUALIZATION FUNCTIONS (plotting utilities)

7. RUN SIMULATION (demo execution with analysis)Key Algorithms:

Agent decision-making: Simplified A* heuristic with exploration

Success calculation: Multi-factor probability with corruption modifiers

SPI regime classification: Threshold-based (0.2, 0.8 boundaries)

Team dissolution: Performance plateau detection

Configuration Points:

Number of agents (default: 25)

Number of rounds (default: 60)

Strategy distribution (configurable dictionary)

Random seeds (42 for reproducibility)

Visualization styles (darkgrid with husl palette)

What This Model Demonstrates

This is not a traditional network model or opinion dynamics simulation. It’s a multi-dimensional adaptive landscape navigation model that captures:

How metrics become weapons - SPI and RJF can measure control, not truth

Why moderate positions vanish - bridge agents penalized in both chaotic and controlled regimes

How corruption self-reinforces - economic power increases narrative success probability

Why narratives persist - through either chaotic persistence or controlled enforcement

The vulnerability of “accurate” systems - high SPI systems can be brittle authoritarian regimes

The model provides a formal, testable framework for understanding complex adaptive systems where:

Agents have competing objectives (state fitness vs SPI fitness)

Success is endogenous - defined by the system state itself

Multiple equilibria exist with different properties

Phase transitions occur between different system regimes

This represents a significant advancement beyond traditional models of information cascades, opinion dynamics, or network effects by incorporating multi-dimensional state spaces, endogenous success metrics, and adaptive strategy evolution in a single cohesive simulation framework.

Jupyter Notebook Mathematics & Code for Replication

1. Core Data Structures & Initialization

Strategy Definition

python

DIMENSIONS = [”Economic”, “Narrative”, “Social”, “Crypto”, “Network”, “Temporal”]

@dataclass

class Strategy:

name: str

target: np.ndarray # 6D target vector ∈ [0,1]^6

spi_target: float # Target SPI ∈ [0,1]

color: str

STRATEGIES = {

“Sovereignty”: Strategy(name=”Sovereignty”, target=[0.95, 0.30, 0.20, 0.90, 0.60, 0.40], spi_target=0.15, color=”blue”),

“Narrative”: Strategy(name=”Narrative”, target=[0.70, 0.95, 0.60, 0.30, 0.80, 0.90], spi_target=0.90, color=”red”),

“Bridge”: Strategy(name=”Bridge”, target=[0.60, 0.70, 0.50, 0.40, 0.90, 0.70], spi_target=0.50, color=”orange”),

“Crypto”: Strategy(name=”Crypto”, target=[0.50, 0.30, 0.10, 0.95, 0.40, 0.30], spi_target=0.50, color=”green”),

“Network”: Strategy(name=”Network”, target=[0.80, 0.70, 0.60, 0.30, 0.95, 0.80], spi_target=0.50, color=”purple”),

“Balanced”: Strategy(name=”Balanced”, target=[0.70, 0.60, 0.50, 0.50, 0.70, 0.60], spi_target=0.50, color=”gray”)

}Agent State Initialization

Each agent starts with a random state near its strategy’s target distribution:

For strategy S, initial state x = [x₀, x₁, x₂, x₃, x₄, x₅] where:

Sovereignty: x₀ ~ U(0.6,0.8), x₁ ~ U(0.2,0.4), x₂ ~ U(0.1,0.3), x₃ ~ U(0.7,0.9), x₄ ~ U(0.4,0.6), x₅ ~ U(0.3,0.5)

Narrative: x₀ ~ U(0.5,0.7), x₁ ~ U(0.7,0.9), x₂ ~ U(0.5,0.7), x₃ ~ U(0.2,0.4), x₄ ~ U(0.6,0.8), x₅ ~ U(0.7,0.9)

Bridge: x₀ ~ U(0.4,0.6), x₁ ~ U(0.5,0.7), x₂ ~ U(0.3,0.7), x₃ ~ U(0.3,0.5), x₄ ~ U(0.7,0.9), x₅ ~ U(0.5,0.7)

Crypto: x₀ ~ U(0.2,0.4), x₁ ~ U(0.1,0.3), x₂ ~ U(0.1,0.3), x₃ ~ U(0.7,0.9), x₄ ~ U(0.3,0.5), x₅ ~ U(0.2,0.4)

Network: x₀ ~ U(0.5,0.7), x₁ ~ U(0.5,0.7), x₂ ~ U(0.4,0.6), x₃ ~ U(0.2,0.4), x₄ ~ U(0.7,0.9), x₅ ~ U(0.5,0.7)

Balanced: x₀ ~ U(0.5,0.7), x₁ ~ U(0.4,0.6), x₂ ~ U(0.3,0.5), x₃ ~ U(0.4,0.6), x₄ ~ U(0.5,0.7), x₅ ~ U(0.4,0.6)2. Key Mathematical Functions

Structure Preservation Index (SPI)

For agent with state vector x = [x₀, x₁, x₂, x₃, x₄, x₅]:

base_SPI = 1.0 - (x₃ × 0.7) # Crypto strength reduces SPI

if 0.3 ≤ x₂ ≤ 0.7: # Bridge position bonus

base_SPI *= 1.2

pattern_bonus = (0.1 × x₁) + (0.05 × x₅) # Narrative + Temporal bonus

SPI = clip(base_SPI + pattern_bonus, 0.1, 0.95)RJF Classification Accuracy

Piecewise linear mapping from SPI:

if SPI < 0.2:

RJF_acc = 0.30 + (SPI / 0.2) × 0.05

= 0.30 + 0.25 × SPI

Range: [0.30, 0.35]

if 0.2 ≤ SPI < 0.8:

RJF_acc = 0.76 + ((SPI - 0.2) / 0.6) × 0.14

= 0.7233 + 0.2333 × SPI

Range: [0.76, 0.90]

if SPI ≥ 0.8:

RJF_acc = 0.97 + ((SPI - 0.8) / 0.2) × 0.03

= 0.85 + 0.15 × SPI

Range: [0.97, 1.00]Agent Fitness

For agent with strategy S having target vector t = [t₀, t₁, t₂, t₃, t₄, t₅] and target SPI τ:

state_fitness = 1.0 - (1/6) × Σ|xi - ti| # Manhattan distance normalized

spi_fitness = 1.0 - |SPI - τ|

total_fitness = 0.7 × state_fitness + 0.3 × spi_fitness

fitness ∈ [0, 1]Heuristic Distance (Weighted Manhattan)

weights = [0.20, 0.15, 0.15, 0.20, 0.15, 0.15]

distance = Σ wᵢ × |xi - ti|

if 0.3 ≤ x₂ ≤ 0.7: # Bridge agents get social distance reduction

distance = Σ wᵢ × |xi - ti| for i ≠ 2 + (0.7 × w₂ × |x₂ - t₂|)3. Action System Mathematics

Available Actions (6 total):

Each action a has:

Name

Effect function fₐ: ℝ⁶ → ℝ⁶

Base cost cₐ

Affected dimensions Dₐ

1. BOOST_ECONOMIC:

fₐ(x) = [min(1, x₀+0.1), max(0, x₁-0.02), x₂, x₃, x₄, x₅]

cₐ = 1.0, Dₐ = {0}

2. AMPLIFY_NARRATIVE:

fₐ(x) = [x₀, min(1, x₁+0.15), min(1, x₂+0.05), max(0, x₃-0.03), min(1, x₄+0.04), min(1, x₅+0.06)]

cₐ = 1.2, Dₐ = {1}

3. SHIFT_SOCIAL:

fₐ(x) = [x₀, x₁, clip(x₂ + U(-0.15, 0.15), 0, 1), x₃, x₄, x₅]

cₐ = 1.5, Dₐ = {2}

4. STRENGTHEN_CRYPTO:

fₐ(x) = [max(0, x₀-0.02), x₁, x₂, min(1, x₃+0.12), x₄, x₅]

cₐ = 1.3, Dₐ = {3}

5. IMPROVE_NETWORK:

fₐ(x) = [min(1, x₀+0.03), min(1, x₁+0.02), min(1, x₂+0.03), x₃, min(1, x₄+0.08), min(1, x₅+0.02)]

cₐ = 1.1, Dₐ = {4}

6. OPTIMIZE_TIMING:

fₐ(x) = [x₀, x₁, x₂, x₃, x₄, min(1, x₅+0.15)]

cₐ = 1.4, Dₐ = {5}Action Success Probability

Base success s₀ = 0.7

Modifiers:

1. Strategy-specific:

if strategy = Sovereignty and action = STRENGTHEN_CRYPTO: s₀ *= 1.2

if strategy = Narrative and action = AMPLIFY_NARRATIVE: s₀ *= 1.3

2. Bridge penalty:

if agent.is_bridge and action = SHIFT_SOCIAL: s₀ *= 0.6

3. Corruption effect (corruption level C ∈ [0,1]):

if C > 0.5:

if action ∈ {BOOST_ECONOMIC, AMPLIFY_NARRATIVE}: s₀ *= 1.2

if action = STRENGTHEN_CRYPTO: s₀ *= 0.8

Success probability = min(0.95, s₀)Historical Success Rate

For action a with history Hₐ = [h₁, h₂, ..., hₙ] where hᵢ ∈ {0,1}:

rₐ = mean(Hₐ[-5:]) if len(Hₐ) ≥ 5 else mean(Hₐ) if Hₐ else 0.7Action Value Calculation (Simplified A heuristic)*

For action a at state x:

x’ = fₐ(x) # Projected new state

d = heuristic_distance(x, t)

d’ = heuristic_distance(x’, t)

improvement = d - d’ # Lower distance is better

adjusted_cost = cₐ × (1.5 - rₐ)

if exploration (probability ε = 0.3): adjusted_cost *= 0.8

if adjusted_cost > 0:

value = improvement / adjusted_cost

else:

value = improvement4. System-Level Metrics

Corruption Level

C = (1/N) × Σᵢ I(xᵢ₀ > 0.7)

where I(condition) = 1 if true, 0 otherwise

N = total agentsBridge Percentage

B = (1/N) × Σᵢ I(0.3 ≤ xᵢ₂ ≤ 0.7)SPI Regime Classification

if SPI_avg < 0.2: Class H (High Corruption, Low Accuracy)

if 0.2 ≤ SPI_avg < 0.8: Class S (Stable Regime)

if SPI_avg ≥ 0.8: Class T (Total Control)5. Team Dynamics Mathematics

Team Formation

Let A be set of agents with:

fitness ≤ 0.7

no current team

For each agent a ∈ A:

if random() < 0.2: # 20% chance to attempt team formation/joining

# Try to join existing team T with social alignment similarity

if ∃T such that |x_a₂ - μ_T₂| ≤ 0.3 and |T| < 5:

join T

else if total_teams < 10:

create new team {a}where μ_T₂ = average social dimension of team T members.

Team Fitness

For team T with members M:

fᵢ = fitness of member i

k = |{distinct strategies in M}|

diversity_bonus = min(0.3, k × 0.05)

team_fitness = (1/|M|) × Σ fᵢ × (1 + diversity_bonus)Team Dissolution Conditions

Team T dissolves if:

|M| < 2

Current_round - creation_round < 5 (minimum lifespan)

Performance plateau (last 10 rounds):

recent_avg = mean(fitness_history[-5:])

previous_avg = mean(fitness_history[-10:-5])

if |recent_avg - previous_avg| < 0.02: dissolveHomogeneous and low-performing:

if k = 1 and mean(fitness_history[-5:]) < 0.4: dissolve6. Core Simulation Loop

Initialize:

N agents with strategies per distribution

Round = 0

For each round r = 0 to R-1:

# Calculate system metrics

C = calculate_corruption()

B = calculate_bridge_percentage()

# Agent adaptation

For each agent i:

a, v = choose_best_action(C)

if a ≠ None:

p = calculate_action_success(a.name, C)

if random() < p:

x_i = clip(fₐ(x_i), 0, 1) # Success

else:

x_i = clip(x_i + N(0, 0.05, 6), 0, 1) # Failure: random perturbation

Update success history Hₐ

Update SPI, fitness, RJF accuracy

Record state history

# Team dynamics

form_teams()

update_teams()

# Record metrics

metrics[”round”].append(r)

metrics[”avg_fitness”].append(mean(fitnesses))

metrics[”corruption”].append(C)

metrics[”bridge_percentage”].append(B)

metrics[”team_count”].append(active_teams)

metrics[”avg_spi”].append(mean(SPIs))

metrics[”avg_rjf_accuracy”].append(mean(RJFs))

Round += 17. Visualization Calculations

2D Projections

1. Economic vs Narrative: (x₀, x₁) with point size ∝ fitness × 100

2. Social vs Crypto: (x₂, x₃) with point size ∝ fitness × 100

3. Network vs Temporal: (x₄, x₅) with point size ∝ fitness × 100

4. SPI vs Fitness: (SPI, fitness) with point size = 100Correlation Analysis

For corruption vs bridges scatter plot:

x = C_t (corruption at time t)

y = B_t (bridge percentage at time t)

Linear regression: y = β₀ + β₁x + ε8. Critical Parameters for Replication

python

# Fixed parameters

np.random.seed(42)

random.seed(42)

N_AGENTS = 25

N_ROUNDS = 60

EXPLORATION_RATE = 0.3

# Action parameters (base costs, effects)

ACTION_PARAMS = {

“BOOST_ECONOMIC”: {”delta”: [0.1, -0.02, 0, 0, 0, 0], “cost”: 1.0},

“AMPLIFY_NARRATIVE”: {”delta”: [0, 0.15, 0.05, -0.03, 0.04, 0.06], “cost”: 1.2},

“SHIFT_SOCIAL”: {”delta_range”: [-0.15, 0.15], “cost”: 1.5},

“STRENGTHEN_CRYPTO”: {”delta”: [-0.02, 0, 0, 0.12, 0, 0], “cost”: 1.3},

“IMPROVE_NETWORK”: {”delta”: [0.03, 0.02, 0.03, 0, 0.08, 0.02], “cost”: 1.1},

“OPTIMIZE_TIMING”: {”delta”: [0, 0, 0, 0, 0, 0.15], “cost”: 1.4}

}

# SPI calculation parameters

CRYPTO_PENALTY = 0.7

BRIDGE_BONUS = 1.2

NARRATIVE_BONUS = 0.1

TEMPORAL_BONUS = 0.05

# Fitness weights

STATE_WEIGHT = 0.7

SPI_WEIGHT = 0.3

# Heuristic distance weights

DISTANCE_WEIGHTS = [0.20, 0.15, 0.15, 0.20, 0.15, 0.15]

BRIDGE_SOCIAL_DISCOUNT = 0.7

# Success probability parameters

BASE_SUCCESS = 0.7

SUCCESS_CAP = 0.95

CORRUPTION_THRESHOLD = 0.5

CORRUPTION_MODIFIERS = {

“BOOST_ECONOMIC”: 1.2,

“AMPLIFY_NARRATIVE”: 1.2,

“STRENGTHEN_CRYPTO”: 0.8

}

BRIDGE_SOCIAL_PENALTY = 0.6

STRATEGY_MODIFIERS = {

(”Sovereignty”, “STRENGTHEN_CRYPTO”): 1.2,

(”Narrative”, “AMPLIFY_NARRATIVE”): 1.3

}

# Team parameters

MAX_TEAM_SIZE = 5

MAX_TEAMS = 10

TEAM_FORMATION_PROB = 0.2

MIN_TEAM_LIFESPAN = 5

DISSOLUTION_PLATEAU_THRESHOLD = 0.02

LOW_PERFORMANCE_THRESHOLD = 0.4

DIVERSITY_BONUS_PER_STRATEGY = 0.05

MAX_DIVERSITY_BONUS = 0.39. Key Statistical Relationships

Correlation Structure

1. Crypto Strength → SPI: β = -0.7

2. Bridge Status → SPI: multiplier = 1.2

3. Narrative → SPI: β = 0.1

4. Temporal → SPI: β = 0.05

5. Economic Power > 0.7 → Corruption increment = 1/N

6. Social ∈ [0.3, 0.7] → Bridge status = TrueEmergent Dynamics

SPI = 1.0 - 0.7×Crypto + 0.2×I(Bridge)×(1 - 0.7×Crypto) + 0.1×Narrative + 0.05×Temporal + ε

where I(Bridge) = 1 if 0.3 ≤ Social ≤ 0.7 else 0

RJF_acc = g(SPI) where g is piecewise linear as defined

fitness = 0.7×(1 - mean|xi - ti|) + 0.3×(1 - |SPI - τ|)10. Complete State Transition Function

The full system can be represented as:

For agent i at time t with state x_i(t):

1. Observe corruption C(t) = (1/N)Σ_j I(x_j₀(t) > 0.7)

2. Choose action a_i(t) = argmax_a V(x_i(t), a, C(t))

where V(x, a, C) = [d(x, t) - d(f_a(x), t)] / [c_a × (1.5 - r_a) × (1 - 0.2×I_explore)]

3. Execute action with probability p = min(0.95, s_a(x, C))

If success: x_i(t+1) = clip(f_a(x_i(t)), 0, 1)

If failure: x_i(t+1) = clip(x_i(t) + ξ, 0, 1), ξ ~ N(0, 0.05²I₆)

4. Update SPI, RJF, fitness

5. Update team membership based on social alignment and performance

System evolves as Markov process with memory (success histories, team histories)This complete mathematical specification should enable exact replication of the simulation. The key innovations are in the multi-dimensional state space, endogenous success metrics, bridge agent mechanics, and team dynamics with diversity bonuses.

Until next time, TTFN.

Impressive modeling of how corruption-feedback loops create self-reinforcing narrative control. The distiction between chaotic persistence (system can't filter) and controlled persistence (system enforces) is something I hadn't seen formalized before. What really stood out was the bridge agent squeeze dynamic, how moderate positions get penalized in both low and high SPI regimes which explains alot about why nuanced takes vanish from these ecosystems. The emergent polarization isn't a bug it's a feature of the system architecture.

Intelligent.

you are intelligent that is for certain.

this is indicative of explanation overload

as intelligent as you are- you could easily teach this with less than half the words.